
Kyriakos G. Vamvoudakis - NSF CAREER CPS-1851588, CPS-1750789

Funding Source: NSF CAREER CPS-1851588, NSF CAREER CPS-1750789


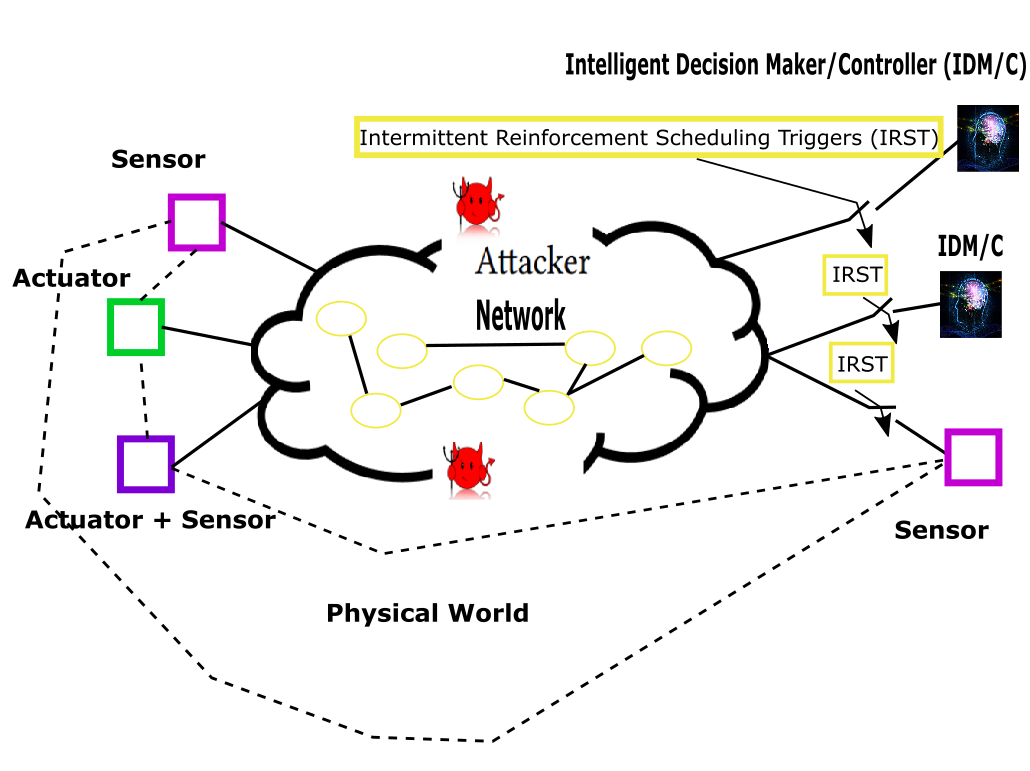

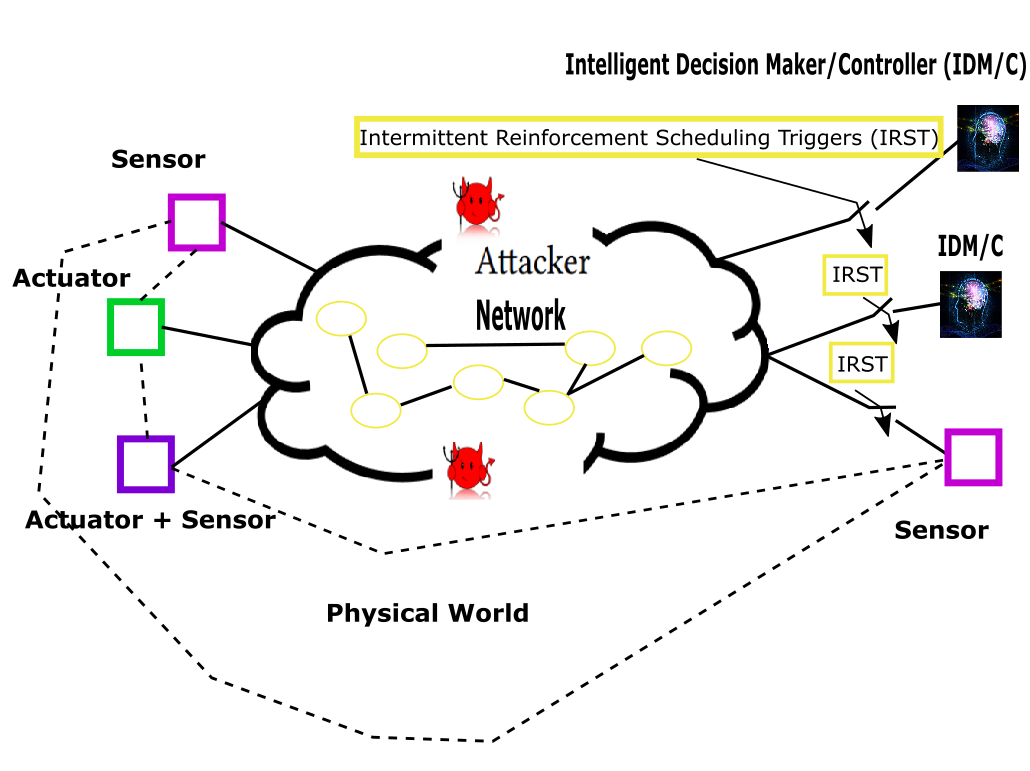

Intermittent learning for smart and efficient CPS. The two important components of our framework are the intelligent decision maker/controller (IDM/C) and the intermittent reinforcement scheduling triggers (IRST). Both of these components will be independent of the CPS model and the environment.
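To make the idea of intermittent (rather than continuous) updates concrete, here is a minimal Python sketch of a generic event-triggered feedback loop. It is not the project's IDM/C or IRST. It assumes a hypothetical scalar plant x[k+1] = a·x[k] + b·u[k], a fixed feedback gain `k_gain` standing in for a learned decision maker, and a relative trigger threshold `sigma`. The function name, parameters, and values are all illustrative. The controller receives a new state sample, and recomputes its action, only when the gap between the current state and the last transmitted state exceeds `sigma·|x|`.

```python
def run_event_triggered(a, b, k_gain, x0, sigma=0.3, steps=50):
    """Simulate a scalar plant under an event-triggered state-feedback law.

    Hypothetical illustration only: a, b, k_gain and sigma are assumed values,
    and the fixed gain stands in for a learned decision maker.
    Returns the final state and the number of trigger events.
    """
    x = float(x0)
    x_hat = x          # last state sample sent to the controller
    events = 0
    for _ in range(steps):
        # Trigger rule: resample only when the sampling error grows too large
        # relative to the current state magnitude.
        if abs(x - x_hat) > sigma * abs(x):
            x_hat = x
            events += 1
        u = -k_gain * x_hat    # action computed from the last sample only
        x = a * x + b * u      # plant evolves at every step
    return x, events


final_state, n_events = run_event_triggered(a=1.0, b=1.0, k_gain=0.2, x0=1.0)
print(f"final state {final_state:.2e}, controller updates {n_events} of 50 steps")
```

After each trigger check the sampling error satisfies |x - x_hat| ≤ sigma·|x|. With the assumed numbers this gives |x[k+1]| ≤ (|a - b·k_gain| + |b·k_gain|·sigma)·|x[k]| = 0.86·|x[k]|. The loop therefore still converges while skipping some controller updates.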


Intellectual Merit


Current learning algorithms cannot easily be applied to CPS because they require continuous and expensive updates, and current triggered frameworks have fundamental limitations. These limitations lead to the following questions. How can we fully adapt to completely unknown, dynamic, and uncertain environments? How do we co-design the actions and the intermittent schemes? How can we provide quantifiable real-time performance, stability, and robustness guarantees by design? And how do we resolve congestion and guarantee security? We will build on our multiyear experience and make fundamental contributions to CPS by providing novel frameworks that enable fully autonomous operation in unknown, bandwidth-restricted, and adversarial environments.

As specific merits, the project will (i) unify new perspectives of learning in engineering to enable smart autonomy, resiliency, bandwidth efficiency, robustness, real-time optimality, and adaptation that cannot be achieved with state-of-the-art approaches; (ii) develop intermittent deep learning methods for CPS that can mitigate sensor attacks by dynamically isolating suspicious components and can handle cases of limited sensing capabilities; (iii) incorporate nonequilibrium game-theoretic learning into CPS whose components do not share similar decision-making mechanisms, do not have the same level of rationality due to heterogeneity, and may differ in the information they obtain or in their ability to use it; and (iv) investigate ways to transfer intermittent learning experiences among agents.


Broader Impact


The overarching goal of this research is to impact and deepen ties among the learning, control, game theory, and CPS communities, and to provide foundational knowledge on which future CPS can be built. Beyond being translational to systems that intermittently learn to improve performance in real time, the research is anticipated to significantly increase the economic competitiveness of the US and to have cross-domain applications. The developments are expected to motivate engineers, psychologists, and computer scientists to address realistic instances of intermittent learning.

We specifically plan to (i) train and educate a new generation of students from multiple disciplines and experience levels, in a transdisciplinary manner and through internships at industrial research labs that will lead to technology transfer; (ii) develop and integrate two new courses into the curriculum that will facilitate transferring research results to education through a set of interlinked research projects; (iii) leverage the outreach efforts of Georgia Tech to mentor high-school teachers, and organize and participate in summer camps for K-12 students from diverse backgrounds, with a special focus on reaching out to underrepresented minorities and women to motivate their interest in autonomy through design projects while also increasing retention rates; (iv) organize special journal issues, tutorials, and invited conference sessions, and, at the end of the project, prepare a research monograph; (v) arrange drone races between autonomous and non-autonomous drones; and (vi) foster collaboration with KTH, Sweden, and the University of Bristol, UK, through student exchange programs.


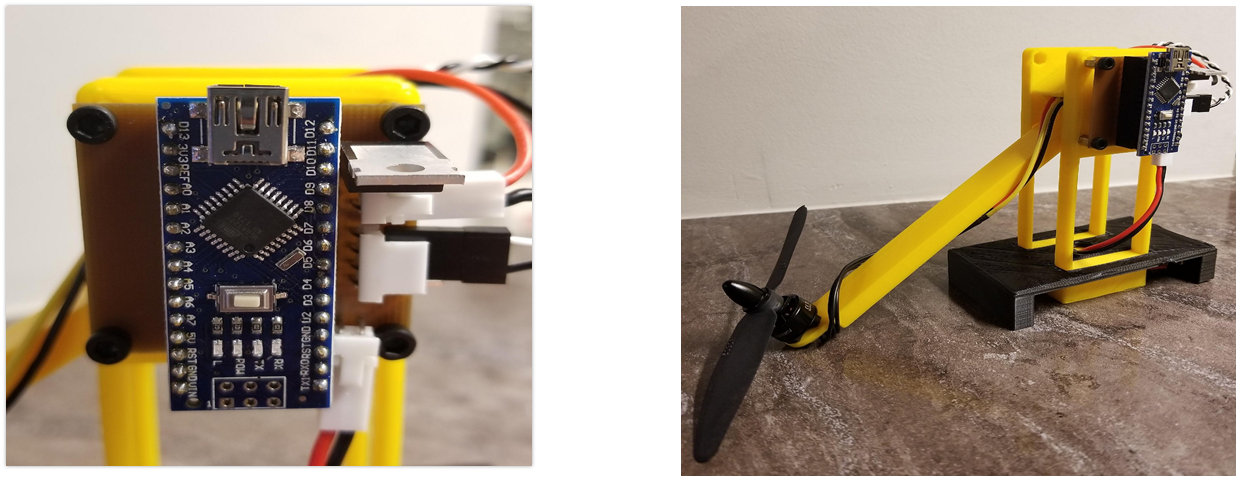

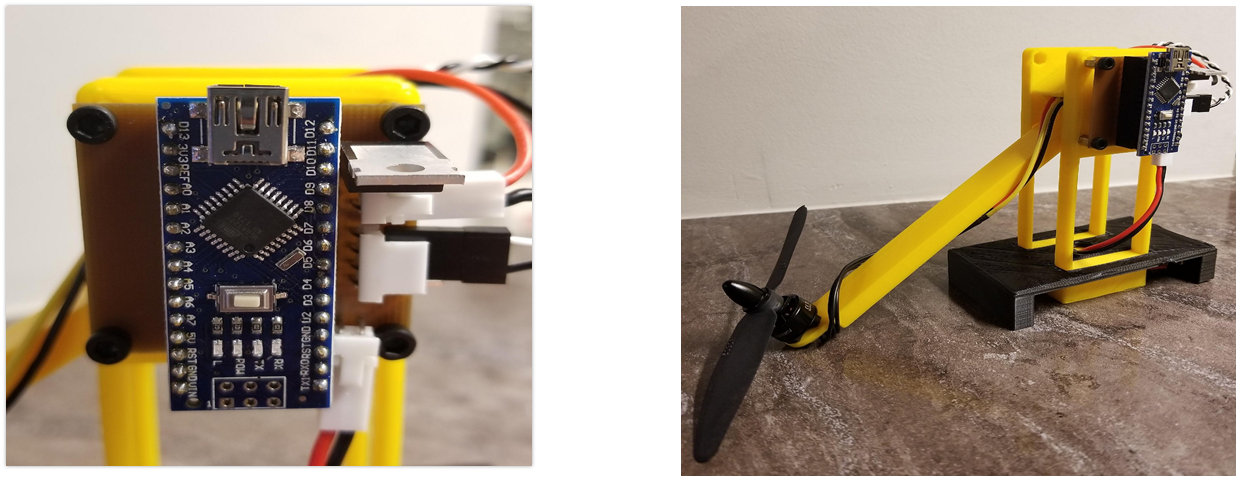

Incorporation of a simple, compact, and affordable personal control system into an undergraduate class, in collaboration with Eric Feron.


Publications


All results, including papers, reports, and software, are freely available to the research community through the World Wide Web. The course materials (including lecture notes, homework, laboratory materials, etc.) are also freely available to the academic community.

The publications based upon research funded by this project can be found here.


Recent Keynote, Plenary Talks, and Events


- Tutorial on From Perception to Planning and Intelligence: A Hands-on Course on Robotics Design and Development using MATLAB and Simulink, “Reinforcement Learning for Robotics,” Invited Speaker, International Conference on Robotics and Automation (ICRA), Paris, France, 2020.
- Workshop on High Dimensional Hamilton-Jacobi Methods in Control and Differential Games, “Solving Game-Theoretic Hamilton-Jacobi Equations in a Model-Free Way,” Invited Keynote Speaker, Institute for Pure and Applied Mathematics (IPAM), UCLA, Los Angeles, CA, 2020.
- University of Tennessee, Knoxville, “An Intermittent Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy,” Seminar, Department of Industrial and Systems Engineering, Knoxville, TN, 2020.
- Florida Atlantic University, “An Intermittent Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy,” Seminar, Department of Electrical and Computer Engineering, Boca Raton, FL, 2020.
- Workshop on Artificial Intelligence for Robust Engineering & Science (AIRES), “Robust and Secure Reinforcement Learning for Prediction and Control,” Invited Speaker, Oak Ridge National Laboratory, Oak Ridge, TN, 2020.
- Workshop on Verifiable Adaptive Control Systems and Learning Algorithms, “Verifiable Reinforcement Learning in Control Applications,” Invited Speaker, IEEE Conference on Decision and Control (CDC), Nice, France, 2019.
- North Carolina A&T State University, “Kinodynamic Motion Planning with Q-Learning: An Online, Model-Free, and Robust Navigation Framework,” Seminar, Department of Electrical and Computer Engineering, Greensboro, NC, 2019.
- NSF Workshop on Robot Learning, “A Safe and Model-Free Kinodynamic Motion Planning,” Invited Speaker, Lehigh, PA, 2019.
- Inaugural Conference on Learning for Dynamics and Control (L4DC), MIT, Cambridge, MA, 2019.
- Workshop on Data-Driven and Machine Learning Enabled Control, “Predictive Learning and Lookahead Simulation,” Invited Speaker, IEEE Conference on Control Technology and Applications (CCTA), Hong Kong, China, 2019.
- University of New Mexico, “A Cyber-Physical System Learning Framework for Smart, Secure, and Efficient Autonomy,” Seminar, Department of Electrical and Computer Engineering, Albuquerque, NM, 2019.
- ARO Workshop on Distributed Reinforcement Learning and Reinforcement Learning Games, “Non-Equilibrium Learning and Security,” Invited Speaker, College Park, MD, 2019.
- Georgia Institute of Technology, “Autonomy and Security of Cyber-Physical Systems,” Inaugural Lecture, School of Aerospace Engineering Student Advisory Council, Atlanta, GA, 2018.
- Syracuse University, “A Control Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy,” Department Seminar Speaker Series, Department of Mechanical and Aerospace Engineering, Syracuse, NY, 2018.
- NSF CPS PI Meeting Workshop, “Recent Developments on Autonomy and Security of Cyber-Physical Systems,” Organizer and Speaker, Alexandria, VA, 2018. (Invited speakers: J. P. Hespanha, G. J. Pappas, P. Tabuada, R. Gerdes, F. Pasqualetti, Y. Wan)
- Symposium on Control Systems and the Quest for Autonomy (in Honor of Professor Panos J. Antsaklis), University of Notre Dame, South Bend, IN, 2018.
- University of California, Santa Barbara (UCSB), “A Control Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy,” Center for Control, Dynamical-systems, and Computation (CCDC) Seminar, Santa Barbara, CA, 2018.
- American Association for the Advancement of Science (AAAS), “A Q-learning Framework for Unknown Networked Systems,” Invited Speaker, Washington, DC, 2018.
- Georgia Institute of Technology, “A Control Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy,” Decision and Control Laboratory Seminar Speaker Series, Atlanta, GA, 2018.
- Workshop on New Problems on Learning and Data Science in Control Theory, “A Cooperative Q-learning Framework in Unknown Networked Systems,” Invited Speaker, American Control Conference, Milwaukee, WI, 2018.
- MathWorks Research Summit, “A Reinforcement Learning Framework for Smart, Secure and Efficient Cyber-Physical Autonomy,” Plenary Speaker, Newton, MA, 2018.
- University of Illinois at Urbana-Champaign (UIUC), “A Control Learning Framework for Smart, Secure, and Efficient Cyber-Physical Autonomy,” Seminar Speaker Series, Coordinated Science Laboratory, Urbana, IL, 2018.


Service and Outreach


- Posters and Demos Chair, International Conference on Distributed Computing in Sensor Systems (DCOSS), Los Angeles, CA, 2020.
- General Co-Chair, 9th International Conference on the Internet of Things (IoT), Bilbao, Spain, 2019.
- Program Chair, 8th International Conference on the Internet of Things (IoT), Santa Barbara, CA, 2018.
- Participated in the Student Transition Engineering Program (STEP) five-week orientation program and taught classes on autonomy, Virginia Tech, Summer 2018.
- Participated in the Center for the Enhancement of Engineering Diversity (CEED) C-Tech two-week all-women summer camp and taught a class on learning control and autonomy, Summer 2017.


Honors and Awards


- Army Research Office (ARO) Young Investigator Program (YIP) Award, 2019.
- Paper recognized as one of the Google Scholar Classic Papers in the area of Automation and Control Theory, 2018.
- Paper “Optimal and Autonomous Control Using Reinforcement Learning: A Survey” listed as a 2018 Publication Spotlight of the IEEE Computational Intelligence Society.



Relevant Courses


- 8750/8751: Robotics Research Fundamentals I and II, Spring 2024.
- AE 8803: Intelligent Cyber-Physical Systems, Spring 2021.
- AE 8803: Optimization-Based Learning Control and Games, Fall 2020.
- AE 8803: Cyber-Physical Systems and Distributed Control, Spring 2019.
- AE 3531: Control Systems Analysis and Design, Fall 2018, 2019.
- AE 4610: Dynamics and Control Laboratory, Spring 2022.
